Two separate studies have produced new image-recognition software that can describe photo content with far greater accuracy than before.

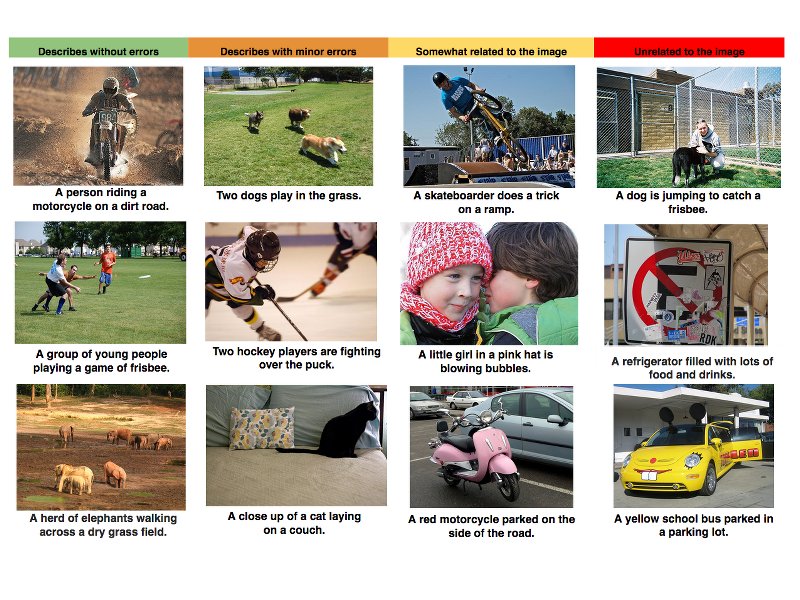

With examples showing how computers can almost reach the descriptive standards of humans, the new software teaches itself to describe entire scenes, rather than single items.

“We’ve developed a machine-learning system that can automatically produce captions to accurately describe images the first time it sees them,” claimed Google in a blog post yesterday.

“This kind of system could eventually help visually impaired people understand pictures, provide alternate text for images in parts of the world where mobile connections are slow, and make it easier for everyone to search on Google for images.”

As impressive as this is, the reality of the growing use of state surveillance around the world should raise significant concerns. As The New York Times notes, governments have been installing more and more cameras throughout the world, documenting their citizens’ movements. With advances such as this, how long before cameras can recognise live footage and begin predicting behaviour?

“As the datasets suited to learning image descriptions grow and mature, so will the performance of end-to-end approaches like this. We look forward to continuing developments in systems that can read images and generate good natural-language descriptions,” said Google.

Examples of Google’s new software, and its attempts to describe entire scenes

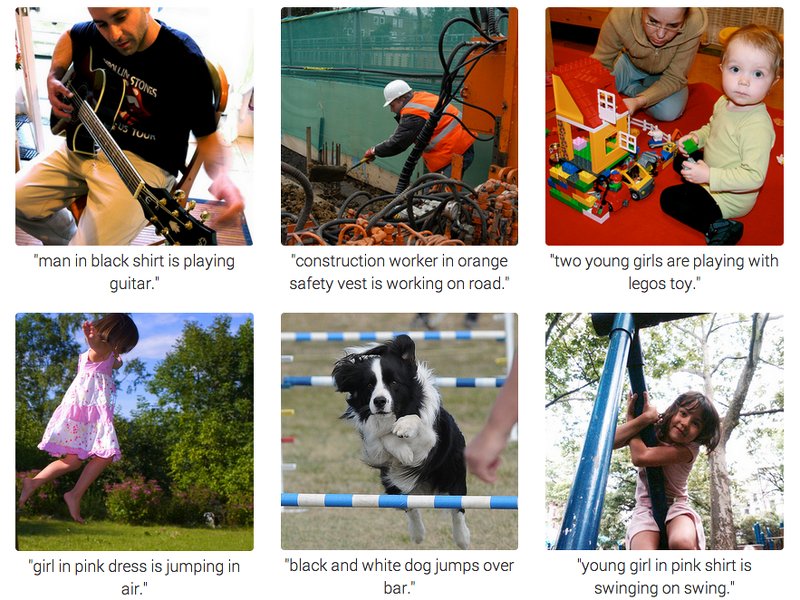

“I consider the pixel data in images and video to be the dark matter of the internet,” said Li Fei-Fei, director of the Stanford Artificial Intelligence Laboratory, who conducted the other research. “We are now starting to illuminate it.”

“Our model leverages datasets of images and their sentence descriptions to learn about the inter-modal correspondences between text and visual data,” reads the Stanford report.

Examples of Stanford University’s research into recognition software

Digital recognition of a human eye image via Shutterstock